It can be difficult to determine how generative AI arrives at its output.

On March 27, Anthropic published a blog post introducing a tool for looking inside a large language model to follow its behavior. The research seeks to answer questions such as what language its model Claude “thinks” in, whether the model plans ahead or predicts one word at a time, and whether the AI’s own explanations of its reasoning actually reflect what is happening under the hood.

In many cases, the explanation does not match what the model is actually doing. Claude generates its own explanations of its reasoning, so those explanations can contain hallucinations too.

A ‘microscope’ for ‘AI biology’

Anthropic published a paper on “mapping” Claude’s internal structures in May 2024, and its new paper, which describes the “features” a model uses to link concepts together, builds on that work. Anthropic calls its research part of the development of a “microscope” for “AI biology.”

In the first paper, Anthropic researchers identified “features” connected by “circuits,” which are paths from Claude’s input to its output. The second paper focused on Claude 3.5 Haiku and examined 10 behaviors to diagnose how the AI arrives at its result. Anthropic found:

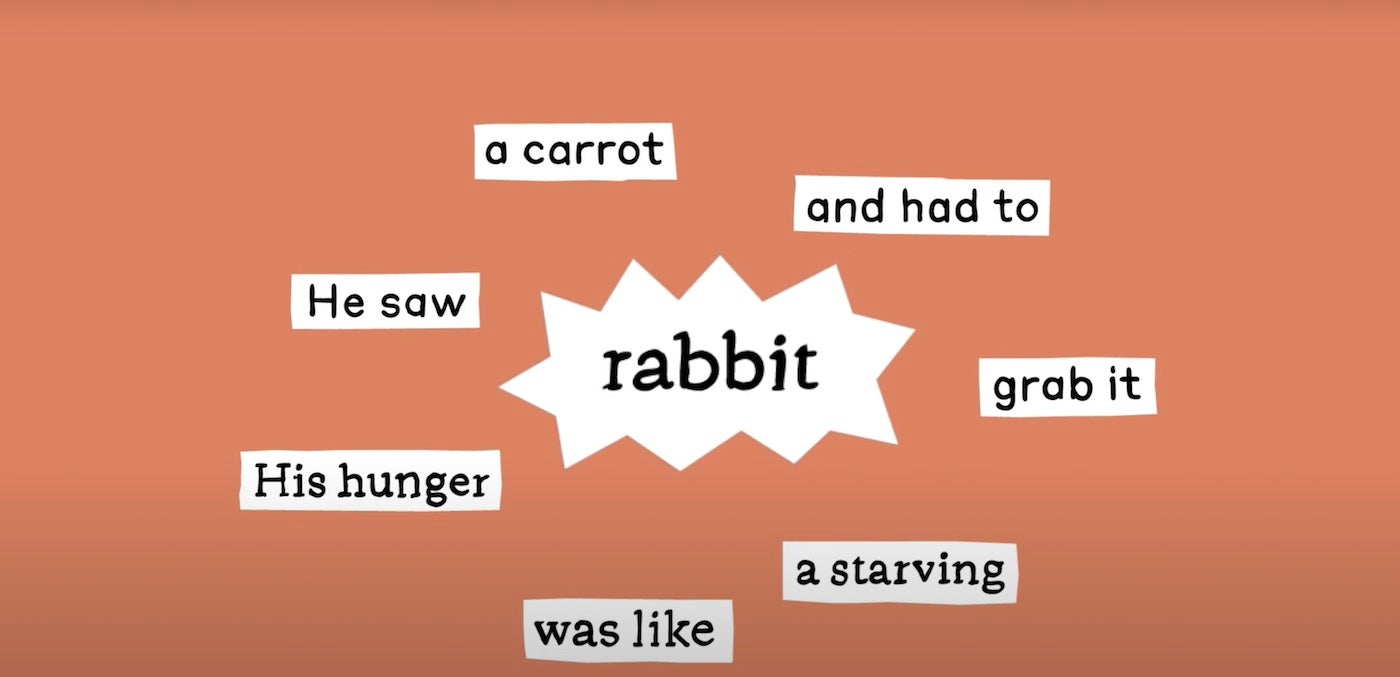

- Claude does plan ahead, especially on tasks such as writing rhyming poetry.

- Within the model, there is “a conceptual space that is shared between languages.”

- Claude can present false reasoning when it lays out its thought process for the user.

The researchers discovered how Claude translates concepts between languages by examining the overlap in how the AI handles the same question in multiple languages. For example, the prompt “the opposite of small is” in different languages is routed through the same features for “the concepts of smallness and oppositeness.”
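To make the idea of a shared conceptual space more concrete, here is a deliberately simplified Python analogy. It is not how Claude works internally: the lookup tables `TO_CONCEPT`, `OPPOSITE`, and `FROM_CONCEPT`, the function `opposite_of`, and the choice of English, French, and Chinese are all hypothetical. The point is only that each language has its own way in and out, while the “opposite” step in the middle is the same for every language.

```python
# Toy analogy only, NOT Claude's implementation: words from several languages
# are mapped onto shared, language-independent concepts, a single shared
# operation runs on those concepts, and the result is mapped back into words.

# Hypothetical table: surface word (any language) -> shared concept.
TO_CONCEPT = {
    "small": "SMALL", "petit": "SMALL", "小": "SMALL",
    "large": "LARGE", "grand": "LARGE", "大": "LARGE",
}

# Hypothetical shared "oppositeness" relation that works on concepts, not words.
OPPOSITE = {"SMALL": "LARGE", "LARGE": "SMALL"}

# Hypothetical table: (concept, output language) -> surface word.
FROM_CONCEPT = {
    ("SMALL", "en"): "small", ("SMALL", "fr"): "petit", ("SMALL", "zh"): "小",
    ("LARGE", "en"): "large", ("LARGE", "fr"): "grand", ("LARGE", "zh"): "大",
}


def opposite_of(word: str, lang: str) -> str:
    """Answer 'the opposite of <word> is' in the requested language."""
    concept = TO_CONCEPT[word]          # language-specific input -> shared concept
    result = OPPOSITE[concept]          # one shared step, reused for every language
    return FROM_CONCEPT[(result, lang)] # shared concept -> language-specific output


print(opposite_of("small", "en"))  # large
print(opposite_of("petit", "fr"))  # grand
print(opposite_of("小", "zh"))     # 大
```

Only the entry and exit tables are language-specific; adding a new language in this toy means adding table rows, not a new “opposite” rule.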

The point about false reasoning is consistent with Apollo Research’s studies of Claude Sonnet 3.7’s ability to detect an ethics test. When asked to explain its reasoning, Claude “will give a plausible-sounding argument designed to agree with the user rather than to follow logical steps,” Anthropic found.


Generative AI isn’t magic; it is sophisticated computing, and it follows rules. However, its black-box nature means it can be difficult to determine what those rules are and under what conditions they arise. For example, Claude showed a general hesitancy to give speculative answers, but it might work out its end goal faster than it produces output: “In a response to an example jailbreak, we found that the model recognized it had been asked for dangerous information well before it was able to gracefully bring the conversation back around,” the researchers found.

How does a word-trained AI solve math problems?

I mostly use ChatGPT for math problems, and the model tends to arrive at the right answer despite some hallucinations in the middle of its reasoning. So I’ve wondered about one of Anthropic’s points: Does the model think of numbers as a sort of letter? Anthropic may have pinpointed exactly why models behave like this: Claude follows several computational paths at the same time to solve math problems.

“One path computes a rough approximation of the answer and the other focuses on precisely determining the last digit of the sum,” Anthropic wrote.
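A toy Python sketch of the two-path idea follows. It is an analogy under stated assumptions, not Anthropic’s account of the mechanism: the helper names `rough_path`, `last_digit_path`, and `add_in_parallel` are hypothetical, and the rough path’s rule (snap each operand to the nearest multiple of 5, then add) is just one convenient way to get an estimate guaranteed to land within 4 of the true sum. Once the estimate is that close, the exact last digit picks out a single answer.

```python
# Toy analogy for non-negative integers; the snapping rule and the +/-4 window
# are assumptions chosen so the two paths always combine into the right sum.

def rough_path(a: int, b: int) -> int:
    """Coarse estimate: snap each operand to the nearest multiple of 5, then add.

    Each snap is off by at most 2, so the estimate is within 4 of a + b.
    """
    def snap(x: int) -> int:
        return 5 * ((x + 2) // 5)
    return snap(a) + snap(b)


def last_digit_path(a: int, b: int) -> int:
    """Exact ones digit of the sum, using only the operands' ones digits."""
    return (a % 10 + b % 10) % 10


def add_in_parallel(a: int, b: int) -> int:
    """Combine the two independent paths into one answer."""
    estimate = rough_path(a, b)
    digit = last_digit_path(a, b)
    # Nine consecutive integers contain at most one number with a given ones
    # digit, and the true sum lies in [estimate - 4, estimate + 4].
    for candidate in range(estimate - 4, estimate + 5):
        if candidate % 10 == digit:
            return candidate
    raise AssertionError("unreachable for non-negative integer inputs")


print(rough_path(36, 57), last_digit_path(36, 57), add_in_parallel(36, 57))
```

For 36 + 57, the rough path alone gives 90 and the last-digit path alone gives 3; neither is the answer on its own, but together they single out 93. Nothing in this sketch implies that the model’s internal estimate has such a neat error bound.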

So it makes sense that the output can be right even when the step-by-step explanation is not.

Claude’s first step is to “analyze the structure of the numbers,” finding patterns in much the same way it would find patterns in letters and words. Claude cannot explain this process externally, just as a human cannot tell which of their neurons are firing; instead, Claude explains the problem the way a person would solve it. The Anthropic researchers speculated that this is because the AI is trained on explanations of math written by humans.
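By contrast, the explanation Claude offers resembles the method taught in school. Anthropic’s post gives the example of Claude describing the standard “carry the 1” algorithm when asked how it added 36 and 59. The sketch below, built around a hypothetical `schoolbook_add` helper, spells out that human-style procedure in Python and records each step as text. That is roughly the kind of step-by-step account Claude gives, even though, according to Anthropic, it is not how the model actually computed the answer.

```python
# Human-style column addition (right to left, carrying into the next column).
# `schoolbook_add` is a hypothetical helper for illustration; inputs are
# assumed to be non-negative integers.

def schoolbook_add(a: int, b: int) -> tuple[int, list[str]]:
    """Add two numbers column by column, returning the sum and a text log of steps."""
    steps: list[str] = []
    result_digits: list[int] = []  # ones digit first
    carry = 0
    column = 0
    while a > 0 or b > 0 or carry:
        da, db = a % 10, b % 10           # current column's digits
        total = da + db + carry
        steps.append(
            f"column {column}: {da} + {db} + carry {carry} = {total}, "
            f"write {total % 10}, carry {total // 10}"
        )
        result_digits.append(total % 10)  # digit written in this column
        carry = total // 10               # carried into the next column
        a //= 10
        b //= 10
        column += 1
    value = sum(d * 10**i for i, d in enumerate(result_digits))
    return value, steps


value, steps = schoolbook_add(36, 59)
for step in steps:
    print(step)
print("result:", value)
```

Both this procedure and the two-path analogy reach the same sum, which is why a correct answer on its own says little about whether the explanation attached to it is faithful.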

What’s next for Anthropic’s LLM research?

Interpreting the “circuits” can be very difficult because generative AI’s internal processing is so dense. It took a human a few hours to interpret the circuits produced by prompts with only “tens of words,” Anthropic said. The researchers suggested that AI assistance may be needed to interpret how generative AI works.

Anthropic said its LLM research is intended to ensure that AI aligns with human ethics; as such, the company is looking into real-time monitoring, model improvements, and model adjustments.